System Logs: 7 Powerful Insights Every Tech Pro Must Know

Ever wondered what’s really happening behind the scenes of your computer or server? System logs hold the answers—silent witnesses to every action, error, and event in your digital environment.

What Are System Logs and Why They Matter

System logs are detailed records generated by operating systems, applications, and network devices that document events, activities, and changes over time. These logs serve as the digital equivalent of a ship’s logbook—tracking every maneuver, alert, and anomaly. Without them, diagnosing issues or investigating breaches would be like navigating in the dark.

The Core Definition of System Logs

At their most basic, system logs are timestamped entries created by software components whenever a notable event occurs. These events range from a user logging in or a service starting up to a failed authentication attempt. Each entry typically includes metadata such as the time, source, severity level, and a descriptive message.

- Generated by the kernel, services, applications, and security modules

- Stored in plain text or structured formats like JSON or XML

- Accessible via command-line tools or centralized logging platforms

“System logs are the first line of defense in incident response.” — SANS Institute

Why System Logs Are Indispensable

From debugging software crashes to detecting cyberattacks, system logs provide critical visibility. They help administrators understand system behavior, verify compliance with regulations, and reconstruct timelines during forensic investigations. In regulated industries like finance or healthcare, maintaining comprehensive system logs isn’t just smart—it’s legally required.

- Enable root cause analysis for outages

- Support audit trails for compliance (e.g., HIPAA, GDPR)

- Facilitate real-time monitoring and alerting

Types of System Logs You Need to Know

Not all system logs are created equal. Different components of a computing environment generate distinct types of logs, each serving a unique purpose. Understanding these categories is essential for effective monitoring and troubleshooting.

Operating System Logs

These are the backbone of system logs, produced by the OS kernel and core services. On Linux systems, they’re typically managed by syslog or journald, while Windows uses the Event Log service.

- Linux: Found in the /var/log/ directory (e.g., messages, auth.log, kern.log)

- Windows: Accessible via Event Viewer under Application, Security, and System logs

- macOS: Uses the Unified Logging System (via the log command)

For example, the auth.log file on Ubuntu captures SSH login attempts, making it a goldmine for detecting brute-force attacks. You can read more about Linux logging standards at rsyslog.com.

Application Logs

Every software application—from web servers like Apache to database engines like MySQL—generates its own logs. These logs focus on application-specific events such as query errors, connection timeouts, or transaction failures.

- Web servers log HTTP status codes, IP addresses, and requested URLs

- Databases record slow queries, deadlocks, and access patterns

- Custom apps often use logging frameworks like Log4j or Serilog

For instance, an Apache access log might show repeated 404 errors, indicating broken links or potential scanning activity. Monitoring these system logs helps maintain performance and security.
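Spotting that pattern is easy to script. The Python snippet below is a minimal sketch that counts 404 responses per client IP. It assumes Apache's Common Log Format; Combined-format lines also match, and their extra referer and user-agent fields are ignored. The function name count_404s_by_ip is hypothetical, and the sample lines are invented.

```python
import re
from collections import Counter
from typing import Iterable

# Apache Common Log Format: host ident authuser [time] "request" status bytes.
# Combined-format lines also match; their referer/user-agent fields are ignored.
CLF_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)

def count_404s_by_ip(lines: Iterable[str]) -> Counter:
    """Count 404 (Not Found) responses per client IP."""
    counts: Counter = Counter()
    for line in lines:
        match = CLF_RE.match(line)
        if match and match.group("status") == "404":
            counts[match.group("ip")] += 1
    return counts

# Invented sample lines using documentation-reserved IP addresses.
sample = [
    '203.0.113.9 - - [10/Oct/2024:13:55:36 +0000] "GET /wp-login.php HTTP/1.1" 404 196',
    '203.0.113.9 - - [10/Oct/2024:13:55:37 +0000] "GET /.env HTTP/1.1" 404 196',
    '198.51.100.4 - - [10/Oct/2024:13:56:02 +0000] "GET /index.html HTTP/1.1" 200 5120',
]
print(count_404s_by_ip(sample).most_common())  # [('203.0.113.9', 2)]
```

A single client requesting paths like /wp-login.php and /.env in quick succession is more suggestive of scanning than of broken links.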

Security and Audit Logs

These specialized system logs track authentication events, privilege escalations, and policy violations. They are crucial for detecting unauthorized access and meeting compliance requirements.

- Windows Security Event Log records logon types, account changes, and object access

- Linux auditd logs SELinux denials and file access attempts

- Firewalls and IDS/IPS systems generate logs on blocked traffic

Tools like OSSEC or Wazuh can parse these system logs in real time to detect anomalies. Learn more about open-source SIEM solutions at elastic.co/what-is/siem.

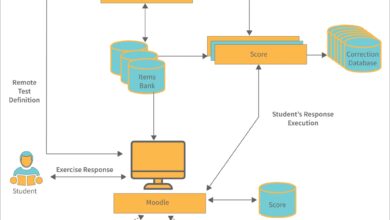

How System Logs Work Behind the Scenes

Understanding the mechanics of how system logs are generated, stored, and processed is key to leveraging them effectively. This process involves several layers—from the initial event trigger to long-term archival.

Log Generation and Event Triggers

Logs are created when software components encounter predefined events. Developers code these triggers as logging calls, and each call is tagged with a severity level such as DEBUG, INFO, WARNING, ERROR, or CRITICAL.

- DEBUG: Detailed information for developers

- INFO: Confirmation that things are working

- WARNING: Indication of a potential issue

- ERROR: A specific operation failed

- CRITICAL: A serious error that may halt the system

For example, a failed login attempt might trigger a WARNING-level entry in the system logs, while a disk full error would be logged as CRITICAL.
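Python's standard logging module uses these same five level names, which makes it a convenient illustration. In this sketch the logger name "auth" and the messages are invented. Because the threshold is set to INFO, the DEBUG call produces no output.

```python
import logging

# Emit INFO and above; DEBUG records are discarded.
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
)
log = logging.getLogger("auth")

log.debug("Checking credentials for %s", "alice")                 # suppressed
log.info("sshd listening on port %d", 22)
log.warning("Failed login for %s from %s", "alice", "203.0.113.7")
log.error("Could not write session record for %s", "alice")
log.critical("Disk full on /var: cannot write logs")
```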

Logging Daemons and Agents

Most systems rely on background services—called daemons or agents—to collect and manage logs. On Linux, rsyslog and syslog-ng are common daemons that receive, filter, and forward log messages.

- rsyslog supports TCP, TLS, and database output

- Fluentd and Logstash act as log shippers in modern architectures

- Agents like AWS CloudWatch Agent or Azure Monitor collect logs in cloud environments

These tools ensure that system logs are not only stored locally but also forwarded to centralized repositories for analysis.
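Many daemons exchange messages in the syslog format standardized in RFC 5424. In that format, a PRI value at the start of each line encodes facility and severity as facility * 8 + severity.

The sketch below builds such a line and sends it over UDP on the loopback interface, with an ephemeral port standing in for a real collector. The helper names are hypothetical. In production you would normally let rsyslog, an agent, or a library handler do this work.

```python
import socket
from datetime import datetime, timezone

def syslog_pri(facility: int, severity: int) -> int:
    """PRI value from RFC 5424: facility * 8 + severity."""
    return facility * 8 + severity

def format_rfc5424(facility: int, severity: int, hostname: str, app_name: str,
                   msg: str, procid: str = "-", msgid: str = "-") -> str:
    """Build an RFC 5424 line; "1" is the version, the lone "-" is empty structured data."""
    timestamp = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
    pri = syslog_pri(facility, severity)
    return f"<{pri}>1 {timestamp} {hostname} {app_name} {procid} {msgid} - {msg}"

AUTH, CRIT = 4, 2  # facility 4 = security/authorization, severity 2 = critical
line = format_rfc5424(AUTH, CRIT, "web1", "sshd", "authentication failure")

# Loopback demo: an ephemeral UDP port stands in for a collector (usually port 514).
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
sender.sendto(line.encode("utf-8"), receiver.getsockname())
received, _ = receiver.recvfrom(4096)
sender.close()
receiver.close()
print(received.decode("utf-8"))
```

Plain UDP offers no delivery guarantee or encryption, which is why daemons such as rsyslog also support TCP and TLS.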

Log Storage and Rotation

Raw logs can consume massive amounts of disk space. To prevent this, systems use log rotation—automatically archiving or deleting old logs based on size or age.

- Linux uses logrotate to compress and rotate logs daily or weekly

- Rotated logs are often gzipped and renamed (e.g., messages.1.gz)

- Policies can be customized to retain logs for compliance periods (e.g., 90 days)

Improper rotation settings can lead to disk exhaustion, so it’s vital to configure them wisely. You can explore logrotate configurations at linux.die.net/man/8/logrotate.
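Applications can also rotate their own files. This sketch is not a replacement for logrotate. Python's logging.handlers.RotatingFileHandler rotates by size, and its namer and rotator hooks can gzip each rotated file, producing names like app.log.1.gz. The helper names are hypothetical. The 1 KiB limit and three backups are illustrative values chosen to force rotation quickly.

```python
import gzip
import logging
import logging.handlers
import os
import shutil
import tempfile

def gzip_namer(default_name: str) -> str:
    # app.log.1 -> app.log.1.gz, similar to logrotate's "compress" option
    return default_name + ".gz"

def gzip_rotator(source: str, dest: str) -> None:
    # Compress the just-closed log file into dest, then remove the uncompressed original
    with open(source, "rb") as f_in, gzip.open(dest, "wb") as f_out:
        shutil.copyfileobj(f_in, f_out)
    os.remove(source)

def make_rotating_logger(path: str, max_bytes: int, backups: int) -> logging.Logger:
    handler = logging.handlers.RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups)
    handler.namer = gzip_namer
    handler.rotator = gzip_rotator
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger = logging.getLogger(f"rotating:{path}")  # one logger per file path
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False
    return logger

# Demo: 200 short records against a 1 KiB limit force several rotations.
demo_dir = tempfile.mkdtemp()
demo_logger = make_rotating_logger(os.path.join(demo_dir, "app.log"), max_bytes=1024, backups=3)
for i in range(200):
    demo_logger.info("request %d handled", i)
for h in demo_logger.handlers:
    h.close()
print(sorted(os.listdir(demo_dir)))  # ['app.log', 'app.log.1.gz', 'app.log.2.gz', 'app.log.3.gz']
```

Once three backups exist, each further rotation discards the oldest archive. This is the same "keep N copies" behavior that logrotate's rotate directive provides.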

Common Tools for Managing System Logs

With the volume and complexity of modern system logs, manual inspection is no longer feasible. Fortunately, a wide array of tools exists to help collect, analyze, and visualize log data efficiently.

Command-Line Tools for Log Inspection

For quick troubleshooting, command-line utilities remain indispensable. They allow direct access to log files and support powerful text processing.

- tail -f /var/log/syslog: Monitor logs in real time

- grep "error" /var/log/apache2/error.log: Search for specific entries

- journalctl -u nginx.service: View systemd service logs

- less +G filename: Navigate large log files efficiently

These tools are lightweight and available on nearly every Unix-like system, making them ideal for on-the-fly diagnostics.
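The core of tail -f can be approximated in a few lines of Python. This simplified sketch polls an already-open file for appended lines and joins partial lines. It does not reopen rotated files the way tail -F does. The generator name follow and the poll interval are assumptions.

```python
import os
import tempfile
import time
from typing import Iterator, TextIO

def follow(f: TextIO, poll_interval: float = 0.5) -> Iterator[str]:
    """Yield complete lines appended to an open file, similar to `tail -f`."""
    buffer = ""
    while True:
        chunk = f.readline()
        if not chunk:
            time.sleep(poll_interval)  # nothing new yet; poll again later
            continue
        buffer += chunk
        if buffer.endswith("\n"):      # only emit whole lines
            yield buffer.rstrip("\n")
            buffer = ""

# Demo on a temporary file: skip existing content, append a line, read it back.
demo_path = os.path.join(tempfile.mkdtemp(), "demo.log")
with open(demo_path, "w") as seed:
    seed.write("old line that is skipped\n")
with open(demo_path) as reader:
    reader.seek(0, os.SEEK_END)        # start at the end, like `tail -n 0 -f`
    lines = follow(reader, poll_interval=0.05)
    with open(demo_path, "a") as writer:
        writer.write("ERROR disk full on /var\n")
    print(next(lines))                 # ERROR disk full on /var
```

To watch a real file such as /var/log/syslog (which may require elevated permissions), open it, seek to the end, and iterate over follow(f), filtering lines as grep would.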

Centralized Logging Platforms

In distributed environments, logs come from dozens or hundreds of sources. Centralized platforms aggregate them into a single interface for unified analysis.

- Elastic Stack (ELK): Elasticsearch, Logstash, and Kibana for indexing and visualization

- Graylog: Open-source alternative with strong alerting features

- Splunk: Enterprise-grade platform with AI-driven analytics

- Fluentd + Loki: Lightweight stack popular in Kubernetes environments

For example, Splunk can correlate system logs across servers to detect lateral movement in a network breach. Explore Splunk’s free trial at splunk.com/en_us/free-trials.html.

Cloud-Based Log Management

As organizations migrate to the cloud, managed logging services have become increasingly popular. These platforms reduce operational overhead and scale automatically.

- AWS CloudWatch Logs captures logs from EC2, Lambda, and RDS

- Google Cloud Logging offers integrated analysis with Cloud Operations

- Azure Monitor Logs stores data in Log Analytics workspaces, which Microsoft Sentinel builds on to provide advanced threat detection

- Datadog and New Relic offer full-stack observability including logs

These services often include built-in dashboards, anomaly detection, and integration with alerting systems like Slack or PagerDuty.

Best Practices for System Logs Management

Collecting logs is only the first step. To derive real value, you must manage them effectively. Following best practices ensures reliability, security, and compliance.

Standardize Log Formats

Inconsistent log formats make parsing and analysis difficult. Adopting a standard format like JSON or using structured logging libraries improves readability and automation.

- Use key-value pairs: {"timestamp": "...", "level": "ERROR", "message": "..."}

- Include consistent fields: user ID, session ID, IP address

- Avoid unstructured text blobs that require regex parsing

Structured system logs integrate seamlessly with tools like Elasticsearch and enable faster querying.
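A structured-logging setup can be as small as a custom formatter. The sketch below uses only Python's standard library and renders each record as one JSON object per line. The context fields user_id, session_id, and client_ip are hypothetical names that callers pass through the extra argument.

```python
import json
import logging
import sys
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    # Hypothetical context fields that callers may supply via `extra=`.
    CONTEXT_FIELDS = ("user_id", "session_id", "client_ip")

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        for field in self.CONTEXT_FIELDS:
            if hasattr(record, field):
                entry[field] = getattr(record, field)
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)

handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
log = logging.getLogger("checkout")
log.addHandler(handler)
log.setLevel(logging.INFO)
log.propagate = False

log.error("Payment declined", extra={"user_id": 42, "client_ip": "198.51.100.23"})
```

Emitting UTC ISO 8601 timestamps and a fixed set of field names makes each line directly queryable once it is indexed.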

Ensure Log Integrity and Security

Logs are only trustworthy if they haven’t been tampered with. Protecting their integrity is critical, especially in forensic scenarios.

- Send logs to a remote, write-once storage system

- Use TLS encryption when transmitting logs over networks

- Implement role-based access control (RBAC) to prevent unauthorized deletion

- Enable log signing or hashing where possible

“If logs can be altered, they cannot be used as evidence.” — NIST SP 800-92
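One way to make tampering detectable is to chain entries together with a keyed hash, so that each entry's tag also covers the previous tag. The sketch below illustrates that idea with HMAC-SHA256. It is not a procedure taken from NIST SP 800-92. The key handling is deliberately simplified, and the helper names are hypothetical.

```python
import hashlib
import hmac
from typing import List, Tuple

# Hypothetical key; in practice keep it off the host whose logs it protects.
SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"
GENESIS = "0" * 64  # stands in for the "previous tag" of the first entry

def chain_tag(prev_tag: str, entry: str, key: bytes = SECRET_KEY) -> str:
    """HMAC-SHA256 over the previous tag plus this entry."""
    return hmac.new(key, (prev_tag + entry).encode("utf-8"), hashlib.sha256).hexdigest()

def seal(entries: List[str]) -> List[Tuple[str, str]]:
    """Pair each entry with a tag that depends on every earlier entry."""
    sealed, prev = [], GENESIS
    for entry in entries:
        prev = chain_tag(prev, entry)
        sealed.append((entry, prev))
    return sealed

def verify(sealed: List[Tuple[str, str]]) -> bool:
    """Recompute the chain; any edited, reordered, or removed middle entry breaks it."""
    prev = GENESIS
    for entry, tag in sealed:
        if not hmac.compare_digest(chain_tag(prev, entry), tag):
            return False
        prev = tag
    return True

log = seal(["user alice logged in", "sudo: alice ran /bin/bash", "user alice logged out"])
print(verify(log))       # True
tampered = [log[0], ("sudo: alice ran /bin/ls", log[1][1]), log[2]]
print(verify(tampered))  # False
```

Each tag depends only on earlier entries, so deleting the newest entries still leaves a valid chain. Storing the latest tag on a separate, write-once system closes that gap.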

Implement Retention Policies

How long should you keep system logs? The answer depends on legal, operational, and storage considerations.

- PCI DSS requires retaining audit logs for at least one year, with the most recent three months immediately available for analysis

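- GDPR's data-minimization and storage-limitation principles restrict how long logs containing personal data may be kept, while its accountability requirements favor keeping audit trails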

- Internal policies may require 30–365 days based on risk profile

Automate retention using tools like logrotate or cloud lifecycle policies to avoid manual errors.
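For the files it manages, logrotate's own rotate and maxage directives usually enforce retention. For archives outside its control, a small sweep job can apply the same rule. In this sketch, purge_old_logs is a hypothetical helper. It defaults to a dry run so you can review what would be deleted before enabling deletion.

```python
import os
import tempfile
import time
from pathlib import Path
from typing import List

SECONDS_PER_DAY = 86_400

def purge_old_logs(log_dir: Path, pattern: str, retention_days: int,
                   dry_run: bool = True) -> List[Path]:
    """Return files matching `pattern` older than the retention window; delete them unless dry_run."""
    cutoff = time.time() - retention_days * SECONDS_PER_DAY
    expired = sorted(p for p in log_dir.glob(pattern)
                     if p.is_file() and p.stat().st_mtime < cutoff)
    if not dry_run:
        for path in expired:
            path.unlink()
    return expired

# Demo in a throwaway directory: one fresh archive and one back-dated 120 days.
demo_dir = Path(tempfile.mkdtemp())
fresh = demo_dir / "app.log.1.gz"
stale = demo_dir / "app.log.9.gz"
fresh.write_bytes(b"")
stale.write_bytes(b"")
aged = time.time() - 120 * SECONDS_PER_DAY
os.utime(stale, (aged, aged))
print(purge_old_logs(demo_dir, "*.gz", retention_days=90))  # lists only app.log.9.gz
```

File modification time is a convenient but imperfect proxy for log age. Archives that are copied or restored may get fresh timestamps.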

Using System Logs for Security Monitoring

One of the most powerful uses of system logs is in cybersecurity. They provide the raw data needed to detect, investigate, and respond to threats.

Detecting Unauthorized Access

Repeated failed login attempts, logins from unusual locations, or access during off-hours can signal compromise. System logs from SSH, Active Directory, or web apps capture these events.

- Monitor auth.log for multiple failed SSH attempts

- Track Windows Event ID 4625 (failed logon) and 4670 (permissions change)

- Set up alerts for logins from high-risk countries

For example, seeing 50 failed SSH attempts from a single IP in one minute is a classic sign of a brute-force attack.
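A per-IP sliding window can detect this brute-force pattern. The sketch makes several assumptions:

- Lines contain sshd's "Failed password ... from IP port N" message.
- Lines start with the traditional 15-character syslog timestamp, which omits the year, so the caller supplies it. Newer distributions may write ISO 8601 timestamps instead.
- The default threshold and window mirror the 50-attempts-per-minute example.

The name detect_bruteforce is hypothetical.

```python
import re
from collections import defaultdict, deque
from datetime import datetime, timedelta
from typing import Deque, Dict, Iterable, Set

# Matches sshd lines such as:
# "Mar  3 10:15:01 web1 sshd[1234]: Failed password for root from 203.0.113.5 port 52311 ssh2"
FAILED_RE = re.compile(
    r"sshd\[\d+\]: Failed password for (?:invalid user )?\S+ from (\S+) port \d+"
)

def detect_bruteforce(lines: Iterable[str], year: int, threshold: int = 50,
                      window: timedelta = timedelta(minutes=1)) -> Set[str]:
    """Return source IPs with at least `threshold` failed SSH logins within `window`."""
    recent: Dict[str, Deque[datetime]] = defaultdict(deque)
    flagged: Set[str] = set()
    for line in lines:
        match = FAILED_RE.search(line)
        if not match:
            continue
        # Traditional syslog timestamps ("Mar  3 10:15:01") are 15 characters and lack a year.
        ts = datetime.strptime(f"{year} {line[:15]}", "%Y %b %d %H:%M:%S")
        attempts = recent[match.group(1)]
        attempts.append(ts)
        while ts - attempts[0] > window:  # slide the window forward
            attempts.popleft()
        if len(attempts) >= threshold:
            flagged.add(match.group(1))
    return flagged
```

Against a real file, passing the lines of /var/log/auth.log along with the current year would return the set of flagged addresses.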

Identifying Malware and Lateral Movement

Once attackers gain access, they often move laterally across systems. System logs can reveal this activity through unusual process executions or network connections.

- Look for unexpected netcat or PowerShell executions

- Check for scheduled tasks or services created by non-admin users

- Correlate logs across endpoints using a SIEM

Sigma, an open and generic format for writing detection rules, can help automate detection of known malicious patterns in system logs.
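Sigma rules are written in YAML and describe field conditions, which a converter or engine then evaluates against events. The toy matcher below imitates only the simplest part: a selection where every field must match. It supports the contains, startswith, and endswith modifiers and compares values case-insensitively. The rule is hypothetical, not copied from the Sigma repository, and the field names follow Sysmon process-creation events.

```python
from typing import Dict, List

# Hypothetical rule shaped like a Sigma "selection": every listed field must match.
RULE = {
    "title": "Encoded PowerShell command line",
    "selection": {
        "Image|endswith": "\\powershell.exe",
        "CommandLine|contains": "-enc",
    },
}

def field_matches(event: Dict[str, str], key: str, expected: str) -> bool:
    field, _, modifier = key.partition("|")
    value = event.get(field, "").lower()  # compare case-insensitively
    expected = expected.lower()
    if modifier == "contains":
        return expected in value
    if modifier == "startswith":
        return value.startswith(expected)
    if modifier == "endswith":
        return value.endswith(expected)
    return value == expected  # no modifier: whole-value match

def rule_matches(rule: Dict, event: Dict[str, str]) -> bool:
    return all(field_matches(event, key, val) for key, val in rule["selection"].items())

# Invented process-creation events.
events: List[Dict[str, str]] = [
    {"Image": "C:\\Windows\\System32\\WindowsPowerShell\\v1.0\\powershell.exe",
     "CommandLine": "powershell.exe -NoP -Enc SQBFAFgA"},
    {"Image": "C:\\Windows\\System32\\notepad.exe",
     "CommandLine": "notepad.exe report.txt"},
]
hits = [event for event in events if rule_matches(RULE, event)]
print(len(hits), RULE["title"])  # 1 Encoded PowerShell command line
```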

Conducting Forensic Investigations

After a breach, system logs are essential for reconstructing the attack timeline. They help answer: Who did what? When? And how?

- Start with authentication logs to identify initial access

- Trace process creation logs to see what malware ran

- Review file access logs to determine data exfiltration

Forensic analysts often use tools like plaso (log2timeline) to combine system logs with other artifacts for a complete picture.
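At its core, building a timeline means merging events from several sources into one time-ordered sequence. The sketch below does only that, applying heapq.merge to per-source lists that are already sorted and normalized to UTC. It is not how plaso works internally, and the events are invented.

```python
import heapq
from datetime import datetime, timezone
from typing import Iterator, List, Tuple

Event = Tuple[datetime, str, str]  # (UTC timestamp, source, description)

def build_timeline(*sources: List[Event]) -> Iterator[Event]:
    """Merge per-source event lists, each already sorted by time, into one sequence."""
    return heapq.merge(*sources, key=lambda event: event[0])

def utc(h: int, m: int, s: int) -> datetime:
    return datetime(2024, 3, 3, h, m, s, tzinfo=timezone.utc)

# Invented events from three sources.
auth = [(utc(9, 14, 2), "auth.log", "Accepted password for svc_backup from 203.0.113.50"),
        (utc(9, 31, 10), "auth.log", "session closed for svc_backup")]
process = [(utc(9, 15, 40), "process", "powershell.exe started by svc_backup")]
files = [(utc(9, 17, 5), "file access", "svc_backup read finance/q1.xlsx")]

for ts, source, description in build_timeline(auth, process, files):
    print(f"{ts.isoformat()} [{source}] {description}")
```

Read top to bottom, the merged output follows the order described above: initial access, then process execution, then file access.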

Challenges and Pitfalls in System Logs Analysis

Despite their value, working with system logs isn’t without challenges. From data overload to misconfigurations, several pitfalls can undermine their effectiveness.

Volume and Noise

Large environments can generate terabytes of logs daily. Sifting through irrelevant entries, such as routine INFO messages, can drown out critical signals.

- Implement filtering to suppress low-severity noise

- Use anomaly detection to highlight deviations

- Leverage machine learning models to classify events

Without proper filtering, alert fatigue can cause real threats to be missed.
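Two common noise-reduction steps can be sketched with Python's logging module:

- A handler-level threshold that drops DEBUG and INFO records.
- A hypothetical DuplicateFilter that suppresses identical messages repeated within a time window. This is similar in spirit to the repeated-message reduction some syslog daemons offer.

The 60-second window is an assumed value.

```python
import io
import logging

class DuplicateFilter(logging.Filter):
    """Drop a record if an identical one was let through less than `interval` seconds ago."""

    def __init__(self, interval: float = 60.0) -> None:
        super().__init__()
        self.interval = interval
        self._last_emitted = {}  # (level, message) -> creation time of last emitted copy

    def filter(self, record: logging.LogRecord) -> bool:
        key = (record.levelno, record.getMessage())
        last = self._last_emitted.get(key)
        if last is not None and record.created - last < self.interval:
            return False
        self._last_emitted[key] = record.created
        return True

def build_quiet_logger(stream: io.StringIO) -> logging.Logger:
    handler = logging.StreamHandler(stream)
    handler.setLevel(logging.WARNING)  # drop DEBUG and INFO at the handler
    handler.addFilter(DuplicateFilter(interval=60.0))
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger = logging.getLogger("noise-demo")
    logger.setLevel(logging.DEBUG)
    logger.handlers.clear()
    logger.addHandler(handler)
    logger.propagate = False
    return logger

stream = io.StringIO()
log = build_quiet_logger(stream)
log.info("cache hit")                       # below WARNING: dropped
for _ in range(5):
    log.warning("disk usage at %d%%", 91)   # only the first copy is emitted
log.error("write failed")
print(stream.getvalue(), end="")
```

The dictionary grows with every distinct message, so a long-running service would need to prune it periodically.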

Inconsistent Timestamps and Time Zones

Logs from different systems may use different time zones or lack synchronization, making correlation difficult.

- Enforce NTP (Network Time Protocol) across all devices

- Store logs in UTC to avoid ambiguity

- Normalize timestamps during ingestion into SIEM

A 5-minute clock drift can throw off incident timelines and confuse investigations.
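Normalizing to UTC is straightforward once each source's offset is known. In this sketch the host names and timestamps are invented, and fw1 is assumed to log local time at UTC-5 without an offset. Sorting the raw strings gives a misleading order, while sorting the UTC values gives the true sequence.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

def to_utc(raw: str, default_offset_hours: float = 0.0) -> datetime:
    """Parse an ISO 8601 timestamp and convert it to UTC."""
    ts = datetime.fromisoformat(raw)
    if ts.tzinfo is None:
        # Naive timestamp: attach the source's known offset before converting.
        ts = ts.replace(tzinfo=timezone(timedelta(hours=default_offset_hours)))
    return ts.astimezone(timezone.utc)

# (host, raw timestamp, offset to assume if the raw value has none)
events: List[Tuple[str, str, float]] = [
    ("web1", "2024-03-03T09:20:00+00:00", 0),
    ("db1", "2024-03-03T10:15:00+01:00", 0),
    ("fw1", "2024-03-03T04:18:00", -5),  # logs local time without an offset
]

by_string = [host for host, _, _ in sorted(events, key=lambda e: e[1])]
by_utc = [host for host, _, _ in sorted(events, key=lambda e: to_utc(e[1], e[2]))]
print(by_string)  # ['fw1', 'web1', 'db1']  (misleading)
print(by_utc)     # ['db1', 'fw1', 'web1']
```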

Log Spoofing and Evasion

Skilled attackers may attempt to manipulate or erase logs to cover their tracks. Some malware even disables logging services.

- Monitor for unexpected service stops (e.g., rsyslog, Windows Event Log)

- Use host-based integrity checks (e.g., Tripwire)

- Send logs to an immutable, external repository

If a system suddenly stops sending logs, that absence itself may be a red flag.
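Detecting that silence can be as simple as comparing each host's last-seen time with a threshold. This sketch assumes the central collector can report a last-seen timestamp per host. The host names, the 10-minute threshold, and the function name silent_hosts are hypothetical. Naturally quiet hosts may need longer thresholds than busy ones.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

def silent_hosts(last_seen: Dict[str, datetime], now: datetime,
                 max_silence: timedelta = timedelta(minutes=10)) -> List[str]:
    """Hosts whose most recent log arrived more than max_silence ago."""
    return sorted(host for host, ts in last_seen.items() if now - ts > max_silence)

now = datetime(2024, 3, 3, 12, 0, tzinfo=timezone.utc)
last_seen = {
    "web1": now - timedelta(minutes=1),
    "db1": now - timedelta(minutes=3),
    "dc1": now - timedelta(hours=2),  # e.g. its logging service was stopped
}
print(silent_hosts(last_seen, now))  # ['dc1']
```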

Future Trends in System Logs and Observability

The world of system logs is evolving rapidly, driven by cloud computing, AI, and the growing need for real-time insights. The future lies in smarter, more integrated observability platforms.

The Rise of Observability Over Monitoring

Traditional monitoring focuses on predefined metrics and alerts. Observability goes further by enabling exploration of unknown issues through logs, metrics, and traces.

- Three pillars: logs, metrics, and traces (all three are signals covered by the OpenTelemetry standard)

- Enables asking ad-hoc questions like “Why did response times spike?”

- Used heavily in microservices and serverless architectures

Projects like Grafana Loki and OpenTelemetry are pushing the boundaries of what system logs can do.
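Correlating logs with traces usually means stamping every log record with the active trace ID. The sketch below shows the idea using only the standard library: contextvars plus a logging filter. It does not use the OpenTelemetry API, whose SDKs provide their own context propagation and log correlation. The names current_trace_id, TraceIdFilter, and handle_request are hypothetical.

```python
import contextvars
import logging
import sys
import uuid

# Hypothetical context variable holding the current request's trace ID.
current_trace_id = contextvars.ContextVar("trace_id", default="-")

class TraceIdFilter(logging.Filter):
    """Copy the active trace ID onto each record so logs can be joined with traces."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.trace_id = current_trace_id.get()
        return True

handler = logging.StreamHandler(sys.stdout)
handler.addFilter(TraceIdFilter())
handler.setFormatter(logging.Formatter("%(levelname)s trace=%(trace_id)s %(message)s"))
log = logging.getLogger("orders")
log.addHandler(handler)
log.setLevel(logging.INFO)
log.propagate = False

def handle_request(trace_id: str) -> None:
    token = current_trace_id.set(trace_id)  # a real service would take this from the propagated context
    try:
        log.info("order lookup took %d ms", 840)
    finally:
        current_trace_id.reset(token)

handle_request(uuid.uuid4().hex)
```

With the same ID in both the log line and the trace, a question like "Why did response times spike?" can move from a slow span straight to the log lines it produced.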

AI-Powered Log Analysis

Artificial intelligence is transforming log analysis by automating pattern recognition, anomaly detection, and root cause identification.

- AI models can learn normal behavior and flag deviations

- Natural language processing (NLP) helps summarize log content

- Predictive analytics can forecast failures before they occur

For example, Google’s Chronicle uses AI to process petabytes of system logs for enterprise threat detection.
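Learning normal behavior does not have to start with machine learning. As a deliberately simple stand-in (a rolling z-score, not an AI model), the sketch below flags minutes whose error count lies more than three standard deviations from the preceding ten-minute baseline. The counts are invented, and production tools typically use far richer models.

```python
from statistics import mean, pstdev
from typing import List

def flag_anomalies(counts: List[int], baseline: int = 10,
                   threshold: float = 3.0) -> List[int]:
    """Return indexes whose value lies > threshold std devs from the preceding window."""
    anomalies = []
    for i in range(baseline, len(counts)):
        window = counts[i - baseline:i]
        mu, sigma = mean(window), pstdev(window)
        if sigma == 0:
            # Perfectly flat baseline: any change at all counts as a deviation.
            if counts[i] != mu:
                anomalies.append(i)
        elif abs(counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Invented errors-per-minute series with one spike at index 11.
errors_per_minute = [4, 5, 3, 6, 4, 5, 4, 3, 5, 4, 5, 48, 4]
print(flag_anomalies(errors_per_minute))  # [11]
```

The minute after the spike is not flagged, partly because the spike itself inflates the next window's standard deviation. This is one reason real systems exclude known anomalies from their baselines.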

Edge and IoT Logging Challenges

As devices move to the edge, logging becomes more complex due to limited resources and intermittent connectivity.

- Edge devices may buffer logs locally before transmission

- Compression and deduplication are essential for bandwidth efficiency

- Security is paramount—logs may contain sensitive sensor data

Lightweight messaging protocols such as MQTT, along with purpose-built lightweight agents, are being used to address these needs.
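Buffering, deduplication, and compression can be combined in one small class. In this sketch, EdgeLogBuffer is hypothetical. It keeps at most capacity entries, dropping the oldest when full. It collapses consecutive duplicates into a count and flushes entries as gzip-compressed JSON lines, ready to send when the link returns. A real agent would also record timestamps and persist the buffer across reboots.

```python
import gzip
import json
from collections import deque
from typing import Deque

class EdgeLogBuffer:
    """Bounded in-memory log buffer for a device with intermittent connectivity."""

    def __init__(self, capacity: int) -> None:
        # deque(maxlen=...) silently drops the oldest entry once full.
        self._entries: Deque[dict] = deque(maxlen=capacity)

    def add(self, message: str, level: str = "INFO") -> None:
        last = self._entries[-1] if self._entries else None
        if last is not None and last["msg"] == message and last["level"] == level:
            last["count"] += 1  # collapse a run of identical messages into one entry
            return
        self._entries.append({"level": level, "msg": message, "count": 1})

    def flush(self) -> bytes:
        """Return buffered entries as gzip-compressed JSON lines and empty the buffer."""
        payload = "\n".join(json.dumps(entry) for entry in self._entries)
        self._entries.clear()
        return gzip.compress(payload.encode("utf-8"))

buf = EdgeLogBuffer(capacity=3)
for _ in range(20):
    buf.add("sensor 7 read timeout", "WARNING")
buf.add("battery at 15%", "WARNING")
buf.add("uplink restored")
buf.add("sync started")  # buffer is full, so the timeout entry is dropped
batch = buf.flush()
print(gzip.decompress(batch).decode("utf-8"))
```

Dropping the oldest entries keeps memory use fixed but loses history during long outages. Devices that cannot afford that loss would spill to local storage instead.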

What are system logs used for?

System logs are used for troubleshooting, security monitoring, compliance auditing, performance analysis, and forensic investigations. They provide a chronological record of events that helps IT professionals understand and respond to system behavior.

Where are system logs stored on Linux?

On Linux, system logs are typically stored in the /var/log directory. Common files include syslog, auth.log, kern.log, and messages. Systemd-based systems also use journald to store logs in binary format, accessible via the journalctl command.

How can I view system logs on Windows?

On Windows, use the Event Viewer (eventvwr.msc) to view system logs. Navigate to Windows Logs > System, Application, or Security to see event details. You can filter, search, and export logs for further analysis.

Are system logs encrypted by default?

No, system logs are not encrypted by default on most systems. They are usually stored unencrypted, often as plain text, which makes securing the storage location and transmission channels critical. You can add encryption yourself, for example by using TLS for log forwarding or disk encryption for the volumes that hold log files.

How long should system logs be retained?

Retention periods vary by industry and regulation. Common requirements include 30 days for internal audits and at least one year for PCI DSS, with the most recent three months immediately available for analysis. Many government and financial organizations require a year or more. Always align retention policies with legal and operational needs.

System logs are far more than just technical records—they are the heartbeat of your IT infrastructure. From diagnosing a simple service crash to uncovering a sophisticated cyberattack, they provide the visibility needed to maintain security, performance, and compliance. As technology evolves, so too will the tools and techniques for managing system logs, but their fundamental importance will only grow. By adopting best practices in collection, analysis, and protection, organizations can turn raw log data into actionable intelligence. Whether you’re a system administrator, security analyst, or developer, mastering system logs is not optional—it’s essential.

Further Reading: